Music-Driven Motion Editing


mpc33 at cam.ac.uk

sb329 at cam.ac.uk

lb282 at cam.ac.uk

mfh20 at cam.ac.uk

Pr at cam.ac.uk

University of Cambridge

Introduction

Sounds are generally associated with motion events in the real world, so there is an intimate connection between the two. Producing effective animations therefore requires the correct association of sound and motion, an essential yet difficult task. Unlike prior, application-specific systems such as [Lytle90; Singer97], we address this problem with a general framework for synchronizing motion curves to perceptual cues extracted from the music. The user can modify existing motions rather than having to incorporate unadapted musical motions into animations. A further fundamental feature of our system is the use of music analysis techniques on complementary MIDI and analogue audio representations of the same soundtrack.
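As a minimal illustration of what synchronizing a motion curve to musical cues can mean, the Python sketch below time-warps a sampled motion curve so that chosen motion events land on given beat frames. It assumes a single motion channel sampled once per frame, and that event and beat frames are already known (for instance, from music analysis). The helpers `piecewise_linear` and `time_warp` are hypothetical illustrations, not part of our system.

```python
from bisect import bisect_right
from typing import List, Sequence


def piecewise_linear(x: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Evaluate the piecewise-linear function through (xs[i], ys[i]).

    xs must be strictly increasing; x outside [xs[0], xs[-1]] is clamped.
    """
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_right(xs, x) - 1  # index of the segment containing x
    t = (x - xs[i]) / (xs[i + 1] - xs[i])
    return ys[i] + t * (ys[i + 1] - ys[i])


def time_warp(motion: Sequence[float], event_frames: Sequence[float],
              beat_frames: Sequence[float], n_out: int) -> List[float]:
    """Hypothetical helper: resample `motion` so event_frames[i] lands on beat_frames[i].

    For each output frame u, the inverse warp (beat time -> event time) gives a
    source time s, and the motion curve is linearly interpolated at s.
    """
    source_frames = list(range(len(motion)))
    out = []
    for u in range(n_out):
        s = piecewise_linear(float(u), beat_frames, event_frames)
        out.append(piecewise_linear(s, source_frames, motion))
    return out


# Usage: move the peak of a short curve from frame 2 onto a beat at frame 3.
curve = [0.0, 2.0, 4.0, 2.0, 0.0]
warped = time_warp(curve, event_frames=[0.0, 2.0, 4.0], beat_frames=[0.0, 3.0, 4.0], n_out=5)
```

Warping time rather than values keeps the shape of each motion segment intact while stretching or compressing it between musical landmarks.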

System Overview

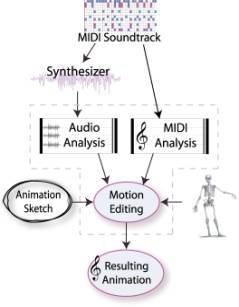

In our system, MIDI and analogue audio features visually influence existing motion curves, such as keyframe animation and motion capture data. The system therefore consists of three distinct modules: MIDI analysis, audio analysis and motion editing. The motion editing module takes an initial motion sequence together with the output of the two music analysis modules and uses them to alter the final motion (Figure 1). Selected music analysis parameters modulate the chosen motion editing methods, and these alterations are layered so that the motion reflects multiple aspects of the music simultaneously. This user-guided mapping process continues until the animator is satisfied with the resulting animation. Our system thus adapts to the user's preferences, whereas in previous, fully automated systems the user must adapt to whatever output is produced.
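One possible way to express music-modulated, layered edits is sketched below in Python. It assumes that the motion curve and each music feature curve are sampled once per animation frame. The edits `scale_by_feature` and `offset_by_feature` and the `apply_layers` helper are hypothetical illustrations, not the actual modules of our system.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical representation: one motion channel and each music feature
# are both sampled once per animation frame.
Curve = List[float]
Edit = Callable[[Curve, Sequence[float]], Curve]


def scale_by_feature(strength: float) -> Edit:
    """Hypothetical edit: exaggerate motion about its mean where the feature is high."""
    def edit(motion: Curve, feature: Sequence[float]) -> Curve:
        mean = sum(motion) / len(motion)
        return [mean + (m - mean) * (1.0 + strength * f) for m, f in zip(motion, feature)]
    return edit


def offset_by_feature(amount: float) -> Edit:
    """Hypothetical edit: add a displacement that follows the feature curve."""
    def edit(motion: Curve, feature: Sequence[float]) -> Curve:
        return [m + amount * f for m, f in zip(motion, feature)]
    return edit


def apply_layers(motion: Sequence[float],
                 layers: Sequence[Tuple[Edit, Sequence[float]]]) -> Curve:
    """Apply each (edit, feature) layer in turn; later layers act on earlier results."""
    result = list(motion)
    for edit, feature in layers:
        result = edit(result, feature)
    return result


# Usage: loudness exaggerates the motion, then note onsets add a small offset.
motion = [0.0, 1.0, 0.0, -1.0]
loudness = [0.0, 1.0, 0.0, 1.0]
onsets = [1.0, 0.0, 0.0, 0.0]
edited = apply_layers(motion, [(scale_by_feature(0.5), loudness),
                               (offset_by_feature(0.2), onsets)])
```

Because layers are applied in order, reordering them can change the result, which is one reason an interactive, user-guided loop is useful when choosing mappings.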

Music Analysis

The system provides a comprehensive selection of music analysis techniques and permits iterative testing of diverse combinations of music and motion editing parameters. An iterative approach is necessary for two reasons: firstly, the animator may not know precisely what they are trying to achieve and needs some leeway for experimentation; secondly, once a particular effect has been decided on, it may not be obvious which parameter mappings are required to achieve it. To this end, a range of perceptually significant features must be extracted from the music. This is partly a process of reducing the overwhelming acoustic information and focusing on aspects that may be desirable to translate visually.

Since the conversion from raw MIDI data into a synthesized soundtrack has a crucial influence on how the music is finally perceived, we include both MIDI and analogue audio-based features. Examples of MIDI-domain features are pitch induction, chord recognition, segmentation and pattern matching; examples of audio-based features are spectral energy, spectral peaks, spectral flux and envelopes. Variations in these features are mapped to variations in the motion.
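Two of the audio-based features named above, spectral flux and an amplitude envelope, can be sketched in Python as follows. This assumes a mono signal given as a list of samples, frames cut with a rectangular window, and a naive DFT so the example stays self-contained; a practical implementation would use a windowed FFT. All function names are hypothetical.

```python
import cmath
import math
from typing import List, Sequence


def frames(signal: Sequence[float], size: int, hop: int) -> List[List[float]]:
    """Split a mono signal into analysis frames (rectangular window, no padding)."""
    return [list(signal[i:i + size]) for i in range(0, len(signal) - size + 1, hop)]


def magnitude_spectrum(frame: Sequence[float]) -> List[float]:
    """Magnitudes of DFT bins 0..N/2 via a naive O(N^2) DFT (use an FFT in practice)."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame)))
        for k in range(n // 2 + 1)
    ]


def rms_envelope(signal: Sequence[float], size: int, hop: int) -> List[float]:
    """Amplitude envelope: root-mean-square level of each frame."""
    return [math.sqrt(sum(x * x for x in f) / len(f)) for f in frames(signal, size, hop)]


def spectral_flux(signal: Sequence[float], size: int, hop: int) -> List[float]:
    """Half-wave rectified spectral flux: summed magnitude increases between consecutive frames."""
    spectra = [magnitude_spectrum(f) for f in frames(signal, size, hop)]
    flux = [0.0]  # the first frame has no predecessor
    for prev, cur in zip(spectra, spectra[1:]):
        flux.append(sum(max(c - p, 0.0) for p, c in zip(prev, cur)))
    return flux


# Usage: two frames of silence followed by two frames of a steady tone.
signal = [0.0] * 16 + [math.sin(2 * math.pi * 2 * t / 8) for t in range(16)]
flux = spectral_flux(signal, size=8, hop=8)
envelope = rms_envelope(signal, size=8, hop=8)
```

The flux peaks at the frame where the tone starts and falls back to roughly zero once the spectrum is steady, which makes it a natural candidate for driving motion accents; the envelope instead tracks sustained loudness.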

Motion Editing

When tightly controlled by music analysis, current motion editing techniques offer wide scope for motion control using a variety of standard signal processing tools. For instance, frequency spectrum rescaling and linear and non-linear filtering can all be used to affect the emotional content of the animation. Filter banks can divide a motion signal into a number of frequency bands, which can be manipulated independently according to music parameters and then reassembled into a new motion signal. Time and displacement warping, multi-resolution editing and multi-target blending provide distinct yet mutually compatible alterations to the motion. Combinations of these operations would be prohibitively complex to carry out manually.
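A filter bank of the kind described above can be sketched as a multiresolution decomposition: the motion curve is repeatedly smoothed with a dilated B-spline kernel, and the differences between successive levels are kept as frequency bands. This particular construction, the kernel, the clamping at the curve ends and the names `smooth`, `decompose` and `reconstruct` are illustrative assumptions rather than a description of our implementation.

```python
from typing import List, Sequence, Tuple

# 5-tap B-spline smoothing kernel (weights sum to 1).
KERNEL = (1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16)


def smooth(signal: Sequence[float], step: int) -> List[float]:
    """Low-pass filter with KERNEL dilated so taps are `step` frames apart; ends are clamped."""
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for j, w in enumerate(KERNEL):
            k = min(max(i + (j - 2) * step, 0), n - 1)
            acc += w * signal[k]
        out.append(acc)
    return out


def decompose(signal: Sequence[float], levels: int) -> Tuple[List[List[float]], List[float]]:
    """Split a motion curve into `levels` band-pass bands (fine to coarse) plus a low-pass residual."""
    bands = []
    current = list(signal)
    for level in range(levels):
        lower = smooth(current, 2 ** level)  # wider smoothing at each level
        bands.append([c - l for c, l in zip(current, lower)])
        current = lower
    return bands, current


def reconstruct(bands: Sequence[Sequence[float]], residual: Sequence[float],
                gains: Sequence[float]) -> List[float]:
    """Add each band, scaled by its (e.g. music-driven) gain, back onto the residual."""
    out = list(residual)
    for band, gain in zip(bands, gains):
        out = [o + gain * b for o, b in zip(out, band)]
    return out


# Usage: with unit gains the bands telescope back to the input curve.
motion = [float((t * 7) % 11) for t in range(40)]
bands, residual = decompose(motion, levels=3)
restored = reconstruct(bands, residual, gains=[1.0, 1.0, 1.0])
```

Setting per-band gains from music features, for example raising the gain of the finest band during loud passages, would exaggerate high-frequency detail in the motion while leaving its overall trajectory, carried by the residual, unchanged.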

Figure 1: System Overview

Conclusion & Future Work

Early results indicate that our system streamlines the workflow of integrating music and animation. Remaining work includes implementing further motion editing techniques and a flexible GUI that will allow us to test many combinations of music and motion parameters rapidly and extensively. Lastly, we will investigate transforms specific to human motion.

References

Lytle, W. 1990. Driving Computer Graphics Animation From A Musical Score. Scientific Excellence in Supercomputing: IBM 1990 Papers.

Singer, E., and Rowe, R. 1997. Two highly integrated real-time music and graphics performance systems. In Proceedings of the International Computer Music Conference (ICMC).