Fast Motion Capture Matching with Replicated Motion Editing

Marc Cardle* Michail Vlachos† Stephen Brooks* Eamonn Keogh† Dimitrios Gunopulos†

*University of Cambridge †University of California, Riverside

1 Introduction

The ability to store large quantities of human motion capture data, or ‘mocap’, has created a growing demand for content-based retrieval of motion sequences without relying on annotations or other meta-data. We address the problem of rapidly retrieving perceptually similar occurrences of a particular motion in a long mocap sequence or an unstructured mocap database, so that editing operations can be replicated with minimal user input. One or more editing operations performed on a given motion are made to affect all similar matching motions. This general approach is applied to standard mocap editing operations such as time-warping, filtering and motion-warping. The style of interaction lies between full automation and complete user control.

Unlike recent mocap synthesis systems [1], where new motion is generated by searching for plausible transitions between motion segments, our method efficiently searches for similar motions using a query-by-example paradigm, while still allowing for extensive parameterization over the nature of the matching.

Figure 1 Index Matching. Distance estimations are calculated between the query MBRs and the indexed MBRs, which can be used to efficiently prune potential matches.

email: {*mpc33 | *sb329}@cl.cam.ac.uk, {†mvlachos | †eamonn | †dg}@cs.ucr.edu

2 Motion Capture Matching

The animator selects a particular motion by specifying its start and end time, and the system searches for similar occurrences in a mocap database (Fig 1). For maximum usability, our mocap matching engine must provide near real-time response to user queries over extended unlabeled mocap sequences, whilst allowing for spatial and temporal deviations in the returned matches. To this end, an unsupervised, non-real-time pre-processing phase (under 10 minutes for 1 hour of mocap data) automatically builds an efficient search index that guarantees no false dismissals. Our novel technique works by splitting each joint curve and its associated angular velocities into multi-dimensional Minimum Bounding Rectangles (MBRs) and storing them in an R-Tree. Support for the dynamic time-warping and Longest Common Subsequence similarity measures caters for noisy motion with variations along the time axis, producing robust and intuitive correspondence between keyframes. Using our index, we rapidly locate potential candidates within a temporal range that the user can adjust dynamically; that is, matches that are up to k percent shorter or longer than the query. Spatial match precision can also be altered dynamically to selectively retain dissimilar matches. Index updates can be performed in real time.
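The pruning idea behind the MBR index can be illustrated with a deliberately simplified sketch. It assumes a single one-dimensional joint curve, fixed-length windows, and a linear scan in place of an R-Tree; the names `MBR`, `split_into_mbrs`, `value_gap` and `prune` are hypothetical and are not taken from our system, which also indexes angular velocities. The gap between two value intervals is a lower bound on the difference between any sample in one box and any sample in the other, so a box whose gap exceeds the tolerance can be discarded without inspecting its samples.

```python
from typing import List, NamedTuple, Sequence


class MBR(NamedTuple):
    """Bounding rectangle of a curve segment in (time, value) space."""
    start: int   # index of the first sample covered (inclusive)
    end: int     # index of the last sample covered (inclusive)
    lo: float    # minimum value inside the segment
    hi: float    # maximum value inside the segment


def split_into_mbrs(curve: Sequence[float], window: int) -> List[MBR]:
    """Cut a curve into consecutive windows and bound each one.

    Fixed-length windows are an assumption made for brevity.
    """
    boxes = []
    for s in range(0, len(curve), window):
        chunk = curve[s:s + window]
        boxes.append(MBR(s, s + len(chunk) - 1, min(chunk), max(chunk)))
    return boxes


def value_gap(a: MBR, b: MBR) -> float:
    """Smallest possible |x - y| for x in box a and y in box b.

    Returns 0.0 when the value intervals overlap.
    """
    return max(0.0, a.lo - b.hi, b.lo - a.hi)


def prune(query: MBR, index: List[MBR], eps: float) -> List[MBR]:
    """Keep only the boxes that could hold a sample within eps of the query."""
    return [box for box in index if value_gap(query, box) <= eps]


curve = [0.0, 0.1, 0.2, 5.0, 5.1, 5.2, 0.1, 0.0]
index = split_into_mbrs(curve, window=3)
query = MBR(0, 1, 0.05, 0.15)
candidates = prune(query, index, eps=0.5)
```

Here the middle window, with values near 5, is rejected from its bounding box alone, while the first and last windows survive as candidates for an exact comparison.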

The animator has the crucial ability to interactively select the body areas utilized in the matching, so that, for example, all instances of a grabbing motion are returned, irrespective of the lower body motion. Negative matches can also be defined to preclude certain motions in the returned matches. For instance, if the user selects a kicking motion as a positive example and a punching motion as a negative example, then only matches with a kick, without a simultaneous punch, will be returned. Finally, in the case where many potential matches exist, the query results are clustered to identify the representative medoid of each cluster. These are simultaneously displayed so that the animator can rapidly dismiss undesirable classes of matches.
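A minimal sketch of these three ideas (body-area selection, negative examples, and medoid selection) is given here under stated assumptions: motions are equal-length dictionaries mapping a joint name to a list of angles, similarity is a plain mean absolute difference, and the clusters are already given. The helper names `masked_distance`, `filter_matches` and `medoid` are hypothetical; the actual system uses the index and similarity measures of Section 2.

```python
from typing import Dict, List, Sequence

# Assumed representation: joint name -> sampled joint angles.
Motion = Dict[str, Sequence[float]]


def masked_distance(a: Motion, b: Motion, joints: Sequence[str]) -> float:
    """Mean absolute difference restricted to the selected joints."""
    total, count = 0.0, 0
    for joint in joints:
        for x, y in zip(a[joint], b[joint]):
            total += abs(x - y)
            count += 1
    return total / count if count else 0.0


def filter_matches(candidates: List[Motion],
                   positive: Motion, pos_joints: Sequence[str],
                   negative: Motion, neg_joints: Sequence[str],
                   eps: float) -> List[Motion]:
    """Keep candidates close to the positive example but not the negative one."""
    kept = []
    for cand in candidates:
        near_pos = masked_distance(cand, positive, pos_joints) <= eps
        near_neg = masked_distance(cand, negative, neg_joints) <= eps
        if near_pos and not near_neg:
            kept.append(cand)
    return kept


def medoid(cluster: List[Motion], joints: Sequence[str]) -> Motion:
    """Member with the smallest summed distance to all other members."""
    return min(cluster,
               key=lambda m: sum(masked_distance(m, o, joints) for o in cluster))


kick = {"leg": [1.0, 1.0], "arm": [0.0, 0.0]}
punch = {"leg": [0.0, 0.0], "arm": [1.0, 1.0]}
kick_only = {"leg": [1.0, 1.0], "arm": [0.0, 0.0]}
kick_and_punch = {"leg": [1.0, 1.0], "arm": [1.0, 1.0]}
standing = {"leg": [0.0, 0.0], "arm": [0.0, 0.0]}

matches = filter_matches([kick_only, kick_and_punch, standing],
                         kick, ["leg"], punch, ["arm"], eps=0.1)
representative = medoid([{"leg": [0.0]}, {"leg": [1.0]}, {"leg": [1.2]}], ["leg"])
```

In this toy example only the kick without a simultaneous punch is retained, and the medoid is the cluster member closest to all the others.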

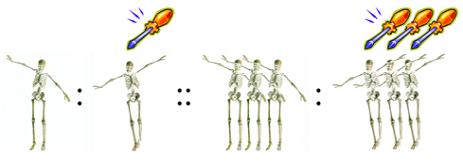

Figure 2 The animator’s motion edit performed on the query motion is automatically replicated over all found matches.

3 Replicated Editing Operations

As well as being a practical and efficient mocap search mechanism, our system can repeat an editing operation performed on the query motion onto all selected matches (Fig 2). For example, one could quickly exaggerate every instance of a punch in the whole database by altering the EMOTE Effort parameters [2], as well as blending in a head-nodding motion. A variety of motion editing operations are supported such as time-warping, motion displacement mapping, motion-waveshaping, blending, smoothing and various other linear and non-linear filtering operations.

For certain editing operations, such as displacement mapping, it is preferable to locally tailor the original transformation before applying it to each match. To this end, dynamic time-warping is used to automatically establish the optimal sample correspondence between the query motion and each match. The original transform, such as the displacement map, is then remapped in time according to the calculated warp. This automatic alignment ensures that edits are meaningful and useful across all matches. Additionally, the strength of applied transforms can be made proportional to the match strength, so that dissimilar motion will be less affected by the edits.
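The remapping step can be sketched as follows for a single one-dimensional joint curve. This is an illustration under assumptions rather than our implementation: it uses the textbook dynamic time-warping recurrence with an absolute-difference cost, averages the displacement when several query frames align to one match frame, and takes a hypothetical `strength` factor in [0, 1] to stand in for the match strength.

```python
from typing import List, Sequence, Tuple


def dtw_path(query: Sequence[float], match: Sequence[float]) -> List[Tuple[int, int]]:
    """Optimal (query index, match index) alignment under classic DTW.

    Both sequences must be non-empty.
    """
    n, k = len(query), len(match)
    inf = float("inf")
    cost = [[inf] * (k + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, k + 1):
            d = abs(query[i - 1] - match[j - 1])
            cost[i][j] = d + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])
    # Backtrack from the end of both sequences to their first samples.
    i, j = n, k
    path = [(i - 1, j - 1)]
    while (i, j) != (1, 1):
        _, i, j = min((cost[i - 1][j - 1], i - 1, j - 1),
                      (cost[i - 1][j], i - 1, j),
                      (cost[i][j - 1], i, j - 1))
        path.append((i - 1, j - 1))
    path.reverse()
    return path


def replicate_displacement(query: Sequence[float], match: Sequence[float],
                           displacement: Sequence[float],
                           strength: float = 1.0) -> List[float]:
    """Apply a displacement map authored on `query` to `match`.

    The map is re-timed along the DTW path; `strength` scales the edit.
    """
    sums = [0.0] * len(match)
    counts = [0] * len(match)
    for qi, mj in dtw_path(query, match):
        sums[mj] += displacement[qi]
        counts[mj] += 1
    # Every match frame appears on the path, so counts are never zero.
    return [m + strength * s / c for m, s, c in zip(match, sums, counts)]


query = [0.0, 1.0, 0.0]
match = [0.0, 0.0, 1.0, 1.0, 0.0]   # a slower version of the query
displacement = [0.0, 0.5, 0.0]       # edit authored on the query
edited = replicate_displacement(query, match, displacement)
softened = replicate_displacement(query, match, displacement, strength=0.5)
```

The edit made at the peak of the query lands on both peak frames of the slower match, and a lower `strength` attenuates it uniformly.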

4 Conclusion and Future Work

Animators should find this tool particularly valuable, since editing motion capture is still very laborious and time-consuming. The benefits are most apparent when dealing with exceedingly long mocap sequences or large mocap databases. Replicated editing might be further improved by investigating alternative ways of locally adapting the original edit to each motion match.

References

[1] Arikan, O, and Forsyth, D, 2002. Interactive motion generation from examples. ACM SIGGRAPH 2002.

[2] Chi, D, Costa, M, Zhao, L, and Badler, N, 2000. The EMOTE model for Effort and Shape. ACM SIGGRAPH 2000.